THE EYE - MATRIXED

Diagram (color chart): horizontal axis Y (yellow, −) to B (blue, +); vertical axis R (red, −) to G (green, +); 0 at the center.

The eye has sensors (cones) for the three primary colors of light (red, green, blue), plus another type of sensor (rods) for night vision.

The eye uses a matrix system to encode color (first diagram, right). It has three outputs:

- The Luminance (LUM) channel sends the detected brightness to the brain. It sums all of the signals.

- The Blue-Yellow (B-Y) differential channel sends the difference between the blue level and the yellow level.

- The Green-Red (G-R) differential channel sends the difference between the green level and the red level.

This is done for each pixel in the central vision area.

In pixels away from central vision, one or both of the differential encoders are omitted.

A yellow signal is made by summing the red and green signals.
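A minimal sketch of the three-output matrix for one central-vision pixel. It assumes linear cone signals with equal weights, and it scales the red + green sum by 1/2 (an assumption, not from the text) so that white light balances the Blue-Yellow channel at 0. The names `encode` and `OpponentSignals` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class OpponentSignals:
    lum: float  # Luminance (LUM): sum of all cone signals
    b_y: float  # Blue-Yellow (B-Y): blue minus yellow
    g_r: float  # Green-Red (G-R): green minus red


def encode(r: float, g: float, b: float) -> OpponentSignals:
    """Toy matrix encoder for one central-vision pixel (linear, equal weights)."""
    # Yellow comes from summing red and green; the 1/2 scale is an assumption
    # so that white light (r = g = b) gives B-Y = 0.
    yellow = (r + g) / 2
    return OpponentSignals(lum=r + g + b, b_y=b - yellow, g_r=g - r)


print(encode(1.0, 1.0, 1.0))  # white: OpponentSignals(lum=3.0, b_y=0.0, g_r=0.0)
print(encode(1.0, 0.0, 0.0))  # red: OpponentSignals(lum=1.0, b_y=-0.5, g_r=-1.0)
```

White light lands at 0 on both differential channels, while pure red pushes both channels negative (toward Y and R on the chart).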

Nerve signals use a form of frequency modulation, so they do not saturate.

Each differential channel is a comparator.

- The comparator compares the frequencies of its two color inputs.

- The comparator outputs its center frequency with white light or no light.

- One input increases the output frequency of the comparator. The other input decreases the frequency.

- The color seen is the same with bright light or dim light.

- The signal from the rods is not sent to the comparators.
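A toy comparator for one differential channel. The center frequency and gain are made-up numbers, and dividing the difference by the total input is an assumption chosen so that the same color gives the same output in bright or dim light. Which input raises the frequency (for example, blue or green) is also assumed, and `comparator` is a hypothetical name.

```python
CENTER_HZ = 100.0  # hypothetical center frequency
GAIN = 0.5  # hypothetical modulation depth (keeps the output positive)


def comparator(raise_input: float, lower_input: float) -> float:
    """Output frequency of one differential channel (toy model).

    raise_input pushes the frequency up, lower_input pushes it down.
    Inputs are cone signals only; rod signals are not passed in.
    """
    total = raise_input + lower_input
    if total == 0.0:
        return CENTER_HZ  # no light: center frequency
    # Normalizing by the total (an assumption) makes the output depend on the
    # ratio of the inputs, not on overall brightness.
    contrast = (raise_input - lower_input) / total  # between -1 and 1
    return CENTER_HZ * (1.0 + GAIN * contrast)


print(comparator(5.0, 5.0))  # balanced (white) light: 100.0
print(comparator(2.0, 8.0), comparator(20.0, 80.0))  # dim vs bright, same color
```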

Cone pigments take time to replenish. Cones temporarily lose a little sensitivity after light exposure. This causes afterimages.
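A toy illustration of an afterimage on the Green-Red channel. The 20% maximum sensitivity loss is a hypothetical number, and `adapted_gain` and `g_r_signal` are hypothetical names. After staring at red, the red cones are less sensitive, so plain white light no longer balances the channel and reads as slightly green.

```python
def adapted_gain(exposure: float, max_loss: float = 0.2) -> float:
    """Cone sensitivity after an exposure in [0, 1]; max_loss is hypothetical."""
    exposure = min(1.0, max(0.0, exposure))
    return 1.0 - max_loss * exposure


def g_r_signal(r: float, g: float, red_gain: float, green_gain: float) -> float:
    """Green-Red differential with each cone scaled by its current sensitivity."""
    return green_gain * g - red_gain * r


# Stare at a red patch: red cones are fully exposed, green cones are not.
red_gain = adapted_gain(1.0)
green_gain = adapted_gain(0.0)

# Then look at white light (equal red and green).
after = g_r_signal(1.0, 1.0, red_gain, green_gain)
print(after > 0)  # True: white is shifted toward green (the afterimage)
```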

The iris automatically opens and closes to optimize light levels.

There is a matrix interpreter for each pixel sent from the eye.

The matrix interpreter (in the brain) finds what color is being seen.

- The Luminance (LUM) channel is used to produce the main image for recognition.

- The Blue-Yellow (B-Y) differential channel and the Green-Red (G-R) differential channel together find the color.

- The color is determined on a 2-axis basis, according to the diagram at right (but with many more colors).
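A sketch of the 2-axis lookup, using only the four labels from the diagram plus a neutral center. The neutral threshold and the nearest-axis rule are assumptions, and `name_color` is a hypothetical name; the eye distinguishes many more colors than four.

```python
import math

# Diagram labels by angle: B at 0°, G at 90°, Y at 180°, R at 270°.
LABELS = ["blue", "green", "yellow", "red"]


def name_color(b_y: float, g_r: float, neutral: float = 0.1) -> str:
    """Toy 2-axis interpreter: nearest diagram label for a (B-Y, G-R) point."""
    if math.hypot(b_y, g_r) < neutral:
        return "no color (white/gray)"  # close to 0 on both axes
    angle = math.degrees(math.atan2(g_r, b_y)) % 360.0
    return LABELS[round(angle / 90.0) % 4]


print(name_color(-0.5, -1.0))  # red
print(name_color(0.0, 0.0))  # no color (white/gray)
```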

In bright light, color information is retained.

Time exposures are impossible.

THE CAMERA (FILM OR DIGITAL) - DISCRETE

The camera has sensors for the three primary colors of light (red, green, blue) in each pixel.

The camera uses a discrete system to encode color. It has three outputs:

- Red pixel

- Green pixel

- Blue pixel

This is done for each pixel in the entire image area.

Each pixel acts independently of all of the other pixels.

Camera pixels accumulate the light that falls on them during the exposure.

The lens opening and shutter speed adjustments affect all three colors equally.

Pixels saturate with too much light exposure.

If all three color pixels saturate, color information is lost and the image turns white there.

Time exposures are used to build up light.
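A minimal model of a discrete camera pixel: each color accumulates light (rate × exposure time) on its own and clips at a full-scale value. The normalized full-scale value of 1.0 and the light rates are assumptions, and `expose` is a hypothetical name.

```python
FULL_SCALE = 1.0  # assumed saturation level of a pixel (normalized)


def expose(rates: tuple[float, float, float], seconds: float) -> tuple[float, ...]:
    """Toy discrete pixel: each color accumulates rate x time on its own
    and clips at FULL_SCALE, independently of the other colors."""
    return tuple(min(FULL_SCALE, rate * seconds) for rate in rates)


faint = (0.125, 0.125, 0.0625)  # dim light, made-up rates per second
print(expose(faint, 2.0))  # (0.25, 0.25, 0.125): still faint
print(expose(faint, 16.0))  # (1.0, 1.0, 1.0): long exposure saturates to white
```

A longer exposure builds up faint light, but once every color reaches full scale the ratios between them are gone.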

Some camera light sensors also pick up light outside the visual range.

I saw a beautiful sunset with a big red sun (left), so I photographed it.

When the film was developed, the image showed a white sun that was not so big.

I decided to find out why.

THE EYE

The matrix color system in the eye preserves the ratios of the 3 primary colors.

Even though the sun is bright, it still appears red to the eye.

Since cameras can't simulate what eyes do, the photo is edited to show what the eye sees (left).

The eye is subject to the horizon illusion that makes the sun and the moon look bigger on the horizon.

THE CAMERA

The discrete pixels are all saturated with the light from the sun.

The saturated pixels make the sun white.

The overexposed image of the sun appears to be white in the photo (right).
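A toy comparison for the red sun. The R, G, B values are made-up numbers for a reddish sun. The eye side is modeled only by the color ratios (each color divided by the total), which the text says the eye preserves; the camera side clips each color independently. The names `ratios` and `camera` are hypothetical.

```python
def ratios(rgb: tuple[float, ...]) -> tuple[float, ...]:
    """Share of each primary in the total (stands in for the eye's ratios)."""
    total = sum(rgb)
    return tuple(c / total for c in rgb)


def camera(rgb: tuple[float, ...], full_scale: float = 1.0) -> tuple[float, ...]:
    """Discrete pixels: each color clips on its own at full_scale."""
    return tuple(min(full_scale, c) for c in rgb)


sun = (1.0, 0.5, 0.25)  # made-up relative R, G, B of a reddish setting sun
for brightness in (0.5, 40.0):  # dim vs bright (arbitrary units)
    light = tuple(brightness * c for c in sun)
    print(brightness, ratios(light), camera(light))
```

The ratios stay red-dominant at both brightness levels, while the bright camera pixel comes out as (1.0, 1.0, 1.0), which is white.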

The camera is not fooled by the horizon illusion.

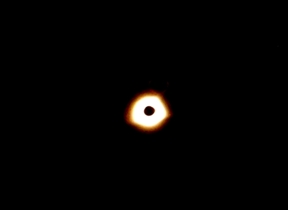

I saw two total eclipses of the sun and tried to photograph them. This is the 2017 eclipse.

When the digital images were viewed, they showed a much bigger corona than was seen visually.

The colors in the images were also different from the colors seen visually.

I decided to find out why.

THE EYE (left)

The matrix color system in the eye preserves the ratios of the 3 primary colors.

The retina of the eye does not accumulate light over time as a camera does.

Since cameras can't simulate what eyes do, the photo is edited to show what the eye sees (left).

I enlarged the photo that was edited to look like what was seen visually.

THE CAMERA (right)

The discrete pixels are all saturated with the light from the sun.

The saturated pixels make the corona white and overexpose it.

Scattering and light too faint for the eye make the corona image oversize and the moon image undersize.

The corona edge color is different because the part rendered red in the photo is farther from the sun than the part visible to the eye.

The overexposed image of the sun appears to be white in the photo (right).
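A rough sketch of why the corona looks bigger in the photo, considering only light too faint for the eye (scattering and the undersized moon are not modeled). It assumes a hypothetical corona brightness that falls off as a power of distance from the sun. The eye sees out to where brightness drops below a fixed threshold, while the camera's accumulation lowers its effective threshold by an exposure factor. All numbers, and the name `apparent_radius`, are hypothetical.

```python
def apparent_radius(i0: float, falloff: float, threshold: float) -> float:
    """Radius (in solar radii) where brightness i0 * r**-falloff drops to threshold.

    Returns 1.0 (the sun's edge) if the corona is below threshold everywhere.
    """
    if i0 <= threshold:
        return 1.0
    return (i0 / threshold) ** (1.0 / falloff)


I0 = 1000.0  # hypothetical corona brightness at the sun's edge
FALLOFF = 3.0  # hypothetical power-law falloff
EYE_THRESHOLD = 1.0  # hypothetical faintest level the eye can see
EXPOSURE_GAIN = 8.0  # hypothetical: accumulation records 8x fainter light

eye = apparent_radius(I0, FALLOFF, EYE_THRESHOLD)
cam = apparent_radius(I0, FALLOFF, EYE_THRESHOLD / EXPOSURE_GAIN)
print(f"eye ~{eye:.1f} solar radii, camera ~{cam:.1f} solar radii")
```

With these made-up numbers the camera's corona is twice as wide as the eye's, since 8 ** (1/3) = 2.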

I saw two total eclipses of the sun and tried to photograph them. This is the 2024 eclipse.

When the digital images were viewed, they showed a much bigger corona than was seen visually.

The corona was asymmetrical both visually and in the photos.

The colors in the images were also different from the colors seen visually.

I now know why.

THE EYE (left)

The matrix color system in the eye preserves the ratios of the 3 primary colors.

The retina of the eye does not accumulate light over time as a camera does.

Since cameras can't simulate what eyes do, the photo is edited to show what the eye sees (left).

I enlarged the photo that was edited to look like what was seen visually.

I had to adjust the color saturation to bring out the prominence (red).

THE CAMERA (right)

The discrete pixels are all saturated with the light from the sun.

The saturated pixels make the corona white and overexpose it.

Scattering and light too faint for the eye make the corona image oversize and the moon image undersize.

The difference in corona edge color in different directions is a scattering effect inside the camera.

The overexposed image of the sun appears to be white in the photo (right).

The bottom photo, from TV, also shows the asymmetric corona.